Recent advances in text-to-image models have opened new frontiers in human-centric generation. However, these models cannot be directly used to generate images of newly coined identities that stay consistent across images. In this work, we propose CharacterFactory, a framework that samples new characters with consistent identities from the latent space of GANs for diffusion models. Specifically, we treat the word embeddings of celebrity names as ground truth for the identity-consistent generation task and train a GAN to learn the mapping from a latent space to the celebrity embedding space. In addition, we design a context-consistent loss to ensure that the generated identity embeddings produce identity-consistent images across various contexts. Remarkably, the whole model takes only 10 minutes to train and can sample an unlimited number of characters end to end at inference time. Extensive experiments show that CharacterFactory performs strongly on character creation in terms of both identity consistency and editability. Furthermore, the generated characters can be seamlessly combined with off-the-shelf image, video, and 3D diffusion models. We believe that CharacterFactory is an important step toward identity-consistent character generation.
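The abstract does not spell out the form of the context-consistent loss. As a minimal sketch, assume it compares the text-encoder features of the pseudo identity across several prompt contexts and penalizes their mean pairwise squared distance, so the loss is zero only when the identity is represented identically in every context. The function names and the choice of squared Euclidean distance are illustrative assumptions, not the paper's exact formulation.

```python
from itertools import combinations
from typing import List, Sequence


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum((x - y) ** 2 for x, y in zip(a, b))


def context_consistent_loss(features: List[Sequence[float]]) -> float:
    """Hypothetical context-consistency penalty.

    features[i] is assumed to be the text-encoder output at the
    pseudo-identity token position for the i-th prompt context.
    Returns the mean squared distance over all pairs of contexts.
    """
    pairs = list(combinations(features, 2))
    if not pairs:
        # A single context has nothing to be inconsistent with.
        return 0.0
    return sum(squared_distance(a, b) for a, b in pairs) / len(pairs)


# Three toy contexts: pair distances are 2, 0 and 2, so the mean is 4/3.
loss = context_consistent_loss([[0.0, 0.0], [1.0, 1.0], [0.0, 0.0]])
```

Averaging over all pairs, rather than comparing each context to one anchor prompt, keeps the penalty symmetric in the contexts; whether the original method does this is not stated in the source.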

Overview of the proposed CharacterFactory. (a) We take the word embeddings of celebrity names as ground truth for identity-consistent generation and train a GAN built from MLPs to learn the mapping from \(z\) to the celebrity embedding space. In addition, a context-consistent loss is designed to ensure that the generated pseudo identity stays consistent across various contexts. \(s^*_1~s^*_2\) are the placeholders for \(v^*_1~v^*_2\). (b) Without involving diffusion models in training, IDE-GAN generates embeddings end to end that can be seamlessly inserted into diffusion models to achieve identity-consistent generation.
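To make the inference pipeline in (b) more concrete, here is a minimal pure-Python sketch, assuming a two-layer MLP generator whose output is split into the two pseudo-identity embeddings \(v^*_1, v^*_2\), which are then written into the prompt's token embeddings at the placeholder positions \(s^*_1, s^*_2\). The layer sizes, the LeakyReLU activation, the random weights, and the names `MLPGenerator` and `insert_identity` are all hypothetical; in the real model the weights are learned and the embeddings live in the text encoder's word-embedding space.

```python
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    """Small random weights standing in for trained parameters."""
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


def linear(x: Vector, w: Matrix) -> Vector:
    """Compute w @ x for a weight matrix of shape (out, in)."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def leaky_relu(x: Vector, slope: float = 0.2) -> Vector:
    return [v if v > 0 else slope * v for v in x]


class MLPGenerator:
    """Hypothetical MLP mapping a latent z to two word embeddings."""

    def __init__(self, z_dim: int, hidden: int, emb_dim: int, seed: int = 0):
        rng = random.Random(seed)
        self.emb_dim = emb_dim
        self.w1 = random_matrix(hidden, z_dim, rng)
        self.w2 = random_matrix(2 * emb_dim, hidden, rng)

    def __call__(self, z: Vector) -> Tuple[Vector, Vector]:
        out = linear(leaky_relu(linear(z, self.w1)), self.w2)
        # Split the output into the two pseudo-identity embeddings v*_1, v*_2.
        return out[: self.emb_dim], out[self.emb_dim :]


def insert_identity(prompt_embs: List[Vector], positions: Tuple[int, int],
                    v1: Vector, v2: Vector) -> List[Vector]:
    """Return a copy of the prompt with v1, v2 at the placeholder positions."""
    result = [list(e) for e in prompt_embs]
    result[positions[0]] = list(v1)
    result[positions[1]] = list(v2)
    return result


# Toy usage: sample z, generate an identity, and place it into a 5-token
# prompt such as "a photo of s*_1 s*_2" (token embeddings are zeros here).
gen = MLPGenerator(z_dim=8, hidden=16, emb_dim=4)
rng = random.Random(1)
z = [rng.gauss(0.0, 1.0) for _ in range(8)]
v1, v2 = gen(z)
prompt = [[0.0] * 4 for _ in range(5)]
edited = insert_identity(prompt, (3, 4), v1, v2)
```

Because the diffusion model only ever sees ordinary word embeddings at the placeholder positions, this kind of substitution needs no change to the diffusion model itself, which is consistent with the caption's claim that diffusion models are not involved in training.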

More identity-consistent character generation results by the proposed CharacterFactory.

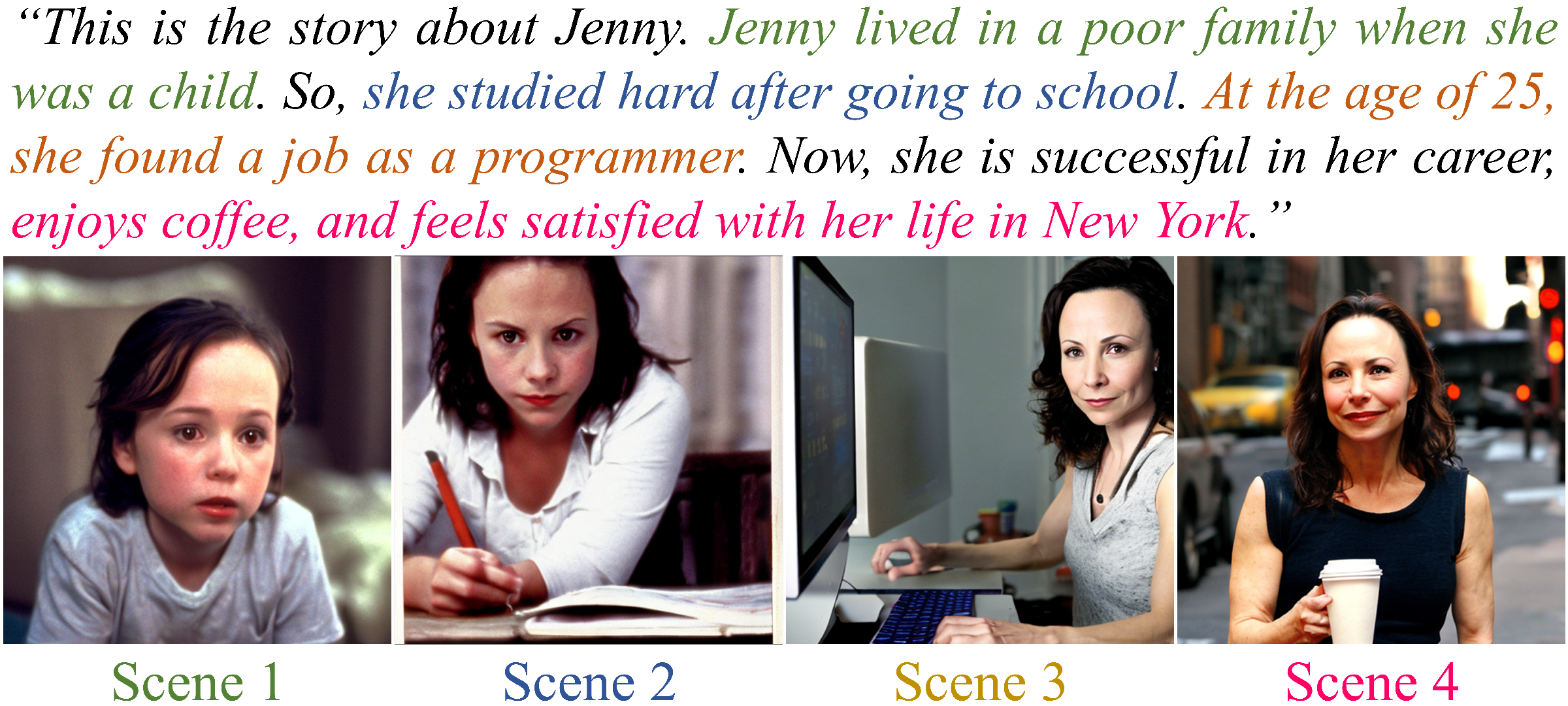

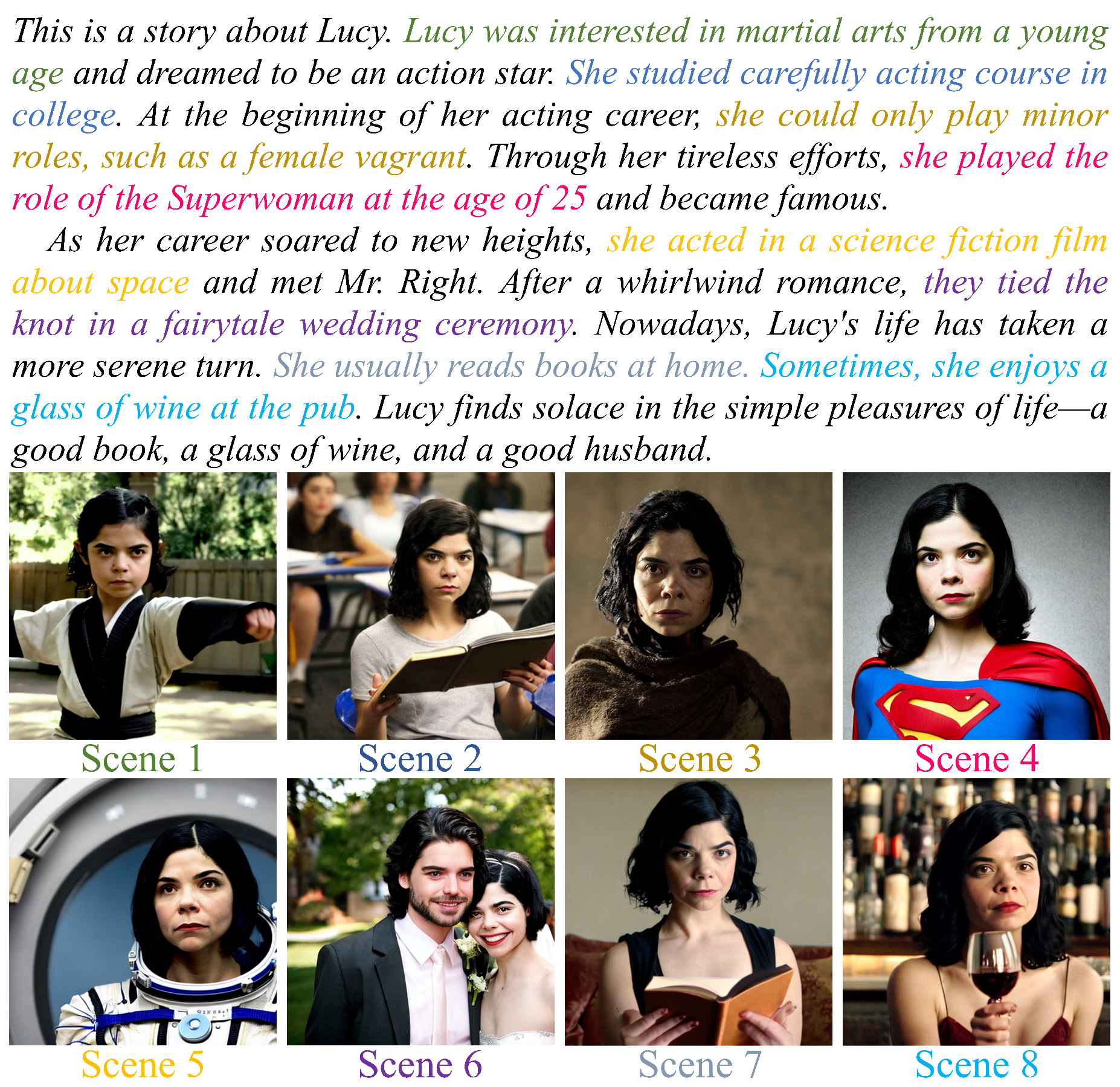

The proposed CharacterFactory can illustrate a story with the same character.

We provide more identity-consistent illustrations for a longer continuous story to further demonstrate the applicability of this use case.

We linearly interpolate between randomly sampled latents \(z_1\) and \(z_2\) and generate pseudo identity embeddings from the interpolated latents with IDE-GAN. To visualize the smooth variations in image space, we insert the generated embeddings into Stable Diffusion via the pipeline of Figure 2(b). Rows 1 and 3 use the same seeds, while rows 2 and 4 use random seeds.
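Linear interpolation in latent space computes \(z(t) = (1-t)\,z_1 + t\,z_2\) for \(t \in [0, 1]\). The sketch below produces evenly spaced latents between the two endpoints; the function names and the number of steps are illustrative, and each resulting latent would then be fed to IDE-GAN to obtain a pseudo identity embedding.

```python
from typing import List, Sequence


def lerp(z1: Sequence[float], z2: Sequence[float], t: float) -> List[float]:
    """Linear interpolation (1 - t) * z1 + t * z2."""
    return [(1.0 - t) * a + t * b for a, b in zip(z1, z2)]


def interpolation_path(z1: Sequence[float], z2: Sequence[float],
                       steps: int) -> List[List[float]]:
    """Evenly spaced latents from z1 (t = 0) to z2 (t = 1), endpoints included."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    return [lerp(z1, z2, i / (steps - 1)) for i in range(steps)]


path = interpolation_path([0.0, 0.0], [1.0, 2.0], 3)
```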

More identity-consistent image, video, and 3D generation results obtained by combining the proposed CharacterFactory with ControlNet, ModelScopeT2V, and LucidDreamer.

@article{wang2025characterfactory,
  title={{CharacterFactory}: Sampling Consistent Characters with {GANs} for Diffusion Models},
  author={Wang, Qinghe and Li, Baolu and Li, Xiaomin and Cao, Bing and Ma, Liqian and Lu, Huchuan and Jia, Xu},
  journal={IEEE Transactions on Image Processing},
  year={2025},
  publisher={IEEE}
}